In today’s digital era, deepfakes have become a serious concern for security, politics, media, and everyday users. These AI-generated videos or images manipulate reality so convincingly that it’s often hard to tell what’s real and what’s not. From fake political speeches to synthetic celebrity scandals, deepfakes are on the rise. They raise major red flags for trust and truth in online content. This guide shows how to spot deepfakes using the latest tools and methods. Stay one step ahead in the age of misinformation.

Understanding Deepfakes and Their Impact

Deepfakes use AI algorithms, especially generative adversarial networks (GANs), to create fake but realistic visuals and audio. They were first made for fun or visual effects. Now, they are often used to spread fake news, commit fraud, and manipulate public opinion.

Common Uses of Deepfakes:

- Fake news videos targeting elections or public figures

- Synthetic celebrity content (often in unethical or malicious contexts)

- Corporate scams via forged executive voices

- Social media hoaxes to mislead the public

How Deepfakes Are Created: Behind the Scenes

To detect deepfakes, it helps to know how they’re made. Many deepfakes rely on generative adversarial networks (GANs), a machine learning setup with two parts: a generator, which creates fake content, and a discriminator, which tries to tell fake from real. The two components “compete” during training, so the generator’s output becomes increasingly realistic over time. Other architectures, such as autoencoders, are also used for face swapping.
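For readers who want the formal version, the competition between generator and discriminator is usually written as a minimax objective, as in the original GAN formulation. In this sketch, G is the generator, D is the discriminator, x is a real sample drawn from the training data, and z is the random noise the generator starts from:

```latex
\min_{G} \max_{D} \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)} \big[ \log D(x) \big]
  + \mathbb{E}_{z \sim p_{z}(z)} \big[ \log \big( 1 - D(G(z)) \big) \big]
```

The discriminator tries to make this value large by scoring real samples near 1 and generated samples near 0, while the generator tries to make it small by producing samples the discriminator scores as real.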

Training these models typically requires thousands of real video frames or images of a person. Once trained, the generator can produce new footage that appears authentic. This makes deepfakes dangerous, especially when used to imitate real people, including public figures and corporate executives.

Today, even open-source tools and apps allow non-experts to generate convincing deepfakes. Platforms like DeepFaceLab and FaceSwap have made creating fake videos easier than ever, increasing the threat of misuse.

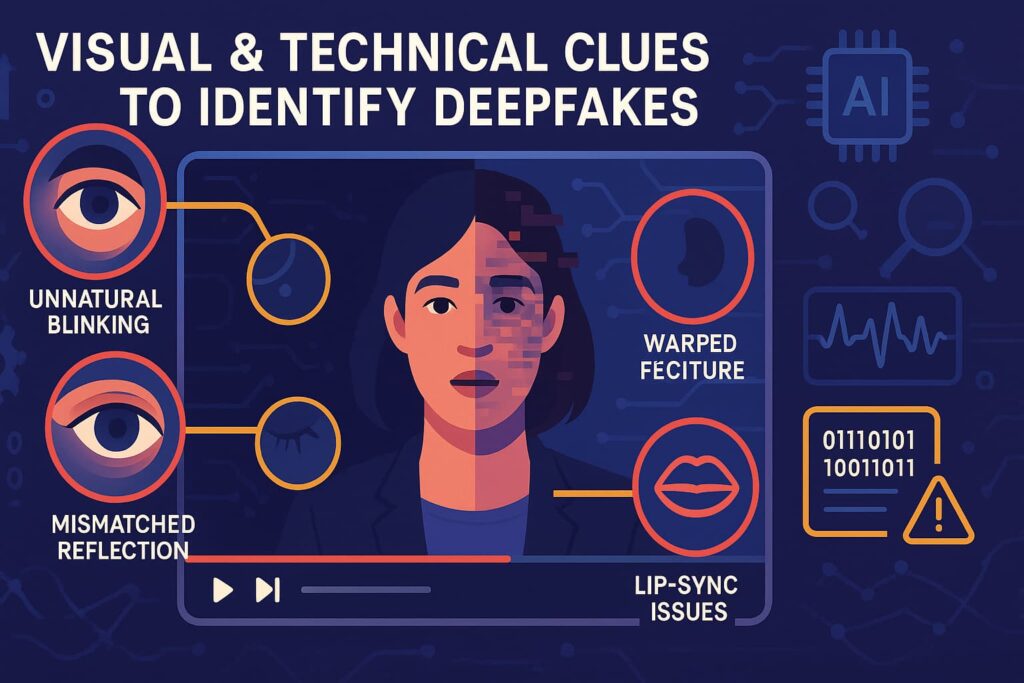

Visual & Technical Clues to Identify Deepfakes

Spotting a deepfake isn’t always easy, but with trained eyes, some tell-tale signs often emerge.

Look for These Visual Clues:

- Unnatural blinking or facial movements

- Mismatched lighting or shadows

- Blurry edges around the face

- Asynchronous audio and lip movement
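The blinking clue above can be turned into a rough numeric check. The sketch below is a minimal illustration, not a production detector. It assumes a separate face-landmark tool (not shown) already supplies six (x, y) points per eye for every frame, computes the widely used eye aspect ratio (EAR), and counts blinks as short runs of low EAR values. The function names, the 0.2 threshold, and the two-frame minimum are assumptions chosen for illustration and would need tuning on real footage.

```python
import math


def eye_aspect_ratio(eye):
    """Return the eye aspect ratio for six (x, y) eye landmarks.

    Assumed ordering: p1 and p4 are the horizontal eye corners,
    p2 and p3 sit on the upper lid, p6 and p5 on the lower lid.
    The ratio drops toward 0 as the eye closes.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_per_frame, threshold=0.2, min_frames=2):
    """Count runs of at least `min_frames` consecutive frames below `threshold`."""
    blinks = 0
    run = 0
    for ear in ear_per_frame:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # clip ended while the eye was still closed
        blinks += 1
    return blinks


def blinks_per_minute(ear_per_frame, fps):
    """Convert a per-frame EAR series into a blink rate."""
    minutes = len(ear_per_frame) / fps / 60.0
    return count_blinks(ear_per_frame) / minutes if minutes else 0.0
```

A blink rate far below what you would expect from a person speaking on camera is only a hint that a clip deserves a closer look. Newer deepfakes often blink convincingly, so treat this as one signal among several.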

Use Detection Tools and Software:

- Microsoft Video Authenticator – Analyzes a photo or video and gives a confidence score for whether it has been manipulated

- Deepware Scanner – Mobile app for detecting deepfake videos

- Reality Defender – A browser extension that flags manipulated media

How Social Platforms Are Fighting Back

Social media platforms play a big role in amplifying or reducing deepfakes’ reach. Many are investing in AI content moderation and real-time detection tools.

- Facebook has banned deepfakes intended to deceive or mislead.

- TikTok and Instagram now label synthetic content in some regions.

- YouTube removes manipulated content that violates its policies.

Despite these steps, the detection process is not perfect. That’s why users also need to take responsibility. Always question videos that seem too sensational or out of character. Verify with reliable news sources before sharing anything questionable.

AI Detection Tools and Techniques

As deepfakes grow more sophisticated, AI-powered tools are stepping up to fight fire with fire.

Top AI Detection Solutions:

- Sensity AI – Enterprise-grade deepfake detection using facial forensics

- Hive Moderation – Used by social platforms to flag manipulated media

- Amber Video – Adds cryptographic stamps to video at the point of recording
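To make the idea of stamping video at the point of recording concrete, here is a minimal sketch using a keyed hash (HMAC-SHA256) from Python's standard library. It is not a description of how Amber Video or any other product works. The segment layout, the function names, and the idea of a secret key held by the recording device are all assumptions made for illustration.

```python
import hashlib
import hmac


def _tag(index, segment, key):
    """HMAC over the segment position plus its bytes, so reordering is detectable."""
    message = index.to_bytes(8, "big") + segment
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def stamp_segments(segments, key):
    """Return one hex stamp per recorded segment (each segment is raw bytes)."""
    return [_tag(i, seg, key) for i, seg in enumerate(segments)]


def find_tampered(segments, stamps, key):
    """Return the indexes of segments whose stamp no longer matches."""
    return [
        i
        for i, (seg, stamp) in enumerate(zip(segments, stamps))
        if not hmac.compare_digest(_tag(i, seg, key), stamp)
    ]


# Example with stand-in bytes instead of real video data.
key = b"hypothetical-device-key"
recorded = [b"frame-data-0", b"frame-data-1", b"frame-data-2"]
stamps = stamp_segments(recorded, key)

edited = list(recorded)
edited[1] = b"swapped-face-data"
print(find_tampered(edited, stamps, key))  # [1]
```

Stamping short segments rather than the whole file means an edit can be located, not just detected. A real system would also need to protect the key and handle segments that are deleted outright, which this sketch does not.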

Research-Based Approaches:

- Eye movement tracking and head pose inconsistencies

- Deep learning models trained on real vs. synthetic data

- Blockchain authentication to prove content origin
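The blockchain item above boils down to a simple idea: keep an append-only log of content fingerprints in which every entry also commits to the entry before it. The following sketch shows that idea with a plain in-memory list. It is a toy under stated assumptions, with no network, consensus, or signatures, and the record fields and function names are invented for illustration.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _sha256(data):
    return hashlib.sha256(data).hexdigest()


def _record_hash(body):
    """Hash a record's fields in a stable key order."""
    return _sha256(json.dumps(body, sort_keys=True).encode("utf-8"))


def add_record(chain, media_bytes, source):
    """Append a fingerprint of `media_bytes` that links to the previous record."""
    body = {
        "media_hash": _sha256(media_bytes),
        "source": source,
        "prev_hash": chain[-1]["record_hash"] if chain else GENESIS,
    }
    record = dict(body, record_hash=_record_hash(body))
    chain.append(record)
    return record


def chain_is_valid(chain):
    """Check every link and every record hash from the start of the log."""
    prev = GENESIS
    for record in chain:
        body = {k: v for k, v in record.items() if k != "record_hash"}
        if record["prev_hash"] != prev or _record_hash(body) != record["record_hash"]:
            return False
        prev = record["record_hash"]
    return True


def is_registered(chain, media_bytes):
    """True if this exact file was registered; any edit changes the hash."""
    digest = _sha256(media_bytes)
    return any(record["media_hash"] == digest for record in chain)
```

Because a plain hash changes when a file is re-encoded or resized, a log like this can only confirm exact copies. It shows where a file came from, not whether an unregistered file is fake.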

Want to know how AI is reshaping our daily lives? Explore real-world AI applications in this MIT News breakdown of AI’s impact on everyday life.

Role of Cybersecurity Experts and Ethical Hackers

The fight against deepfakes also involves the cybersecurity community. Ethical hackers and researchers find weaknesses in AI systems. They create tools to catch deepfakes before they spread.

Security firms are beginning to offer deepfake detection as part of their digital threat protection services. Some are even using biometric analysis to detect inconsistencies in facial patterns or voice tones.

Organizations now use media forensics, a field focused on checking digital content for signs of manipulation. Common techniques include metadata analysis, error-level analysis, and pixel-level detection.
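As a small taste of pixel-level analysis, the sketch below measures local sharpness with the variance of a Laplacian filter, a common blur metric, and compares a face region with a reference region from the same frame. It works on a grayscale image given as a plain list of rows so that it needs no image library. The box coordinates, the function names, and the use of a simple ratio are assumptions for illustration. This is a heuristic, not a forensic tool.

```python
from statistics import pvariance


def laplacian_variance(gray):
    """Variance of a 4-neighbour Laplacian over a grayscale grid.

    `gray` is a list of equal-length rows of intensities and must be
    at least 3x3. Higher values mean more fine detail (sharper).
    """
    height, width = len(gray), len(gray[0])
    responses = []
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            responses.append(
                gray[y - 1][x] + gray[y + 1][x]
                + gray[y][x - 1] + gray[y][x + 1]
                - 4 * gray[y][x]
            )
    return pvariance(responses)


def crop(gray, top, left, height, width):
    """Cut a rectangular region out of the grid."""
    return [row[left:left + width] for row in gray[top:top + height]]


def sharpness_ratio(gray, face_box, reference_box):
    """Face sharpness divided by reference sharpness.

    Each box is (top, left, height, width). A ratio well below 1
    means the face region is noticeably softer than the reference.
    """
    face = laplacian_variance(crop(gray, *face_box))
    reference = laplacian_variance(crop(gray, *reference_box))
    return face / reference if reference else float("inf")
```

A face that is much softer than the rest of the frame can point to blending, but it can also come from shallow depth of field or heavy compression, so the result is a prompt for further checks and not a verdict.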

Global Policies and Ethical Implications of Deepfakes

The spread of deepfakes has forced international organizations and governments to take action. Their misuse impacts privacy, freedom of speech, and global security.

Ethical and Policy Challenges:

- Free speech vs. censorship

- Political manipulation using synthetic media

- Weaponization of misinformation

Explore international AI ethics standards:

Learn about UNESCO’s Recommendation on the Ethics of Artificial Intelligence, the first global framework of its kind, adopted by 193 member states. It promotes values like transparency, fairness, and human dignity.

Understand global AI governance efforts:

Read the World Economic Forum’s AI Governance Alliance Briefing Paper. It shares multi-stakeholder strategies for fair and strong generative AI regulation.

Risks and Challenges of Deepfakes

Deepfakes aren’t just a technical issue; they create real-world risks.

Key Concerns:

- Loss of trust in journalism and digital content

- Cybersecurity threats (identity theft, scams)

- Deepfake revenge porn and harassment

Preventing Deepfake Abuse: The Role of Law and Society

Stopping deepfake abuse requires both strong laws and an informed society. Governments must create clear deepfake regulations to punish misuse and protect citizens. Legal frameworks should address issues like identity theft, non-consensual content, and political misinformation.

At the same time, public education is key. People need to learn how to identify deepfakes, understand how AI manipulation works, and think critically about what they see online. Schools, media outlets, tech companies, lawmakers, and civil society must work together. This collaboration can build trust and reduce harm from synthetic media.

Deepfakes on Mobile and Messaging Apps

The rise of mobile deepfake apps is another growing concern. Some apps allow users to insert their faces into popular movies or mimic celebrity voices in real time. While these are often marketed as fun or entertainment, they also pose risks.

Such tools can be misused for harassment, trolling, or fake endorsements. As mobile devices become more powerful, it gets easier to create and share realistic deepfake content, so the threat keeps growing.

Mobile-specific detection tools are being developed to counter this. These tools run in the background and alert users when synthetic media is detected. Expect to see more of these technologies embedded into future smartphones and messaging platforms.

Combating Deepfakes: Policy Action and Public Response

Combating deepfakes requires a multi-layered strategy involving law, tech innovation, and global awareness.

Recommended Actions:

- AI watermarking of authentic content

- Media literacy education in schools and public campaigns

- Cross-border legislation to regulate harmful deepfake use

- Partnerships with tech companies for real-time detection

- Investment in detection research and public datasets

Deepfakes are a double-edged sword: creative in theory, dangerous in practice. Knowing how to spot them is essential to safeguarding truth in a digitally mediated world. By staying informed and using the right tools, we can limit the damage deepfakes do to trust, democracy, and online safety.

Let’s stay informed, remain critical, and use technology wisely to protect the truth.
